Diffusion models in generative AI have changed the way we look at and approach creative content.

These are innovative models that have the ability to transform random noise into highly realistic and detailed outputs, ranging from stunning images to intricate musical compositions. Diffusion models in generative AI turn chaos into order and open up exciting possibilities in creative fields.

The working of Diffusion Models in Generative AI:

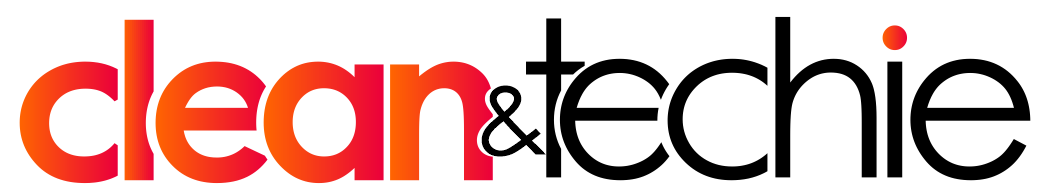

1. Forward processing

Noise is incrementally added to an image over multiple stages until it becomes entirely random.

2. Reverse Processing

The model works in the exact opposite way of the forward process, starting from progressively removing random noise to generate a new image.

Diffusion models in generative AI operate based on the above two processes. The forward process gradually adds noise to an image until it’s unrecognizable, corresponding to blurring a photo step-by-step. The reverse process, though, is the magic: It starts with random noise, and the model learns to reverse this process, progressively removing noise to generate a new, realistic image. It’s as good as restoring a heavily blurred photo to its original clarity.

Advantages of Diffusion Models

1. High-quality outputs

Diffusion models go beyond producing high-quality outputs by gradually removing noise from random data, resulting in detailed and realistic images that often surpass the quality of those generated by other methods.

2. Versatility

In diffusion models, versatility is handling various data types like images, audio, and text. Unlike models restricted to specific data, diffusion models can be adapted to generate diverse outputs, making them highly adaptable tools in AI.

3. Controllability

Diffusion models offer increasing control over generated output by manipulating the noise removal process, allowing for fine-tuning of image attributes like style, pose, or specific details, making them versatile for various creative and practical applications.

The top 6 applications of Diffusion Models in Generative AI

1. Text-to-image generation

Text-to-image generation is a remarkable application of diffusion models. It involves training a model on a massive dataset of image-text pairs to learn the relationship between visual and textual information.

2. Video and audio generation

Diffusion models extend their capabilities to video and audio generation. By processing sequential data, these models can create realistic videos and audio clips, from generating music to producing movie scenes. This versatility highlights the power of diffusion models across different data modalities.

3. Data augmentation

Diffusion models can generate diverse, realistic data, making them ideal for data augmentation. By creating synthetic training examples, these models enhance model performance and reduce overfitting, especially when real-world data is limited.

4. 3D model generation

Diffusion models can generate 3D models by iteratively refining noisy 3D data into detailed structures. This involves training the model on a vast dataset of 3D models to learn the underlying patterns and then generating new 3D objects based on given inputs or conditions.

5. Medical image analysis and Drug discovery

Medical Image Analysis: Diffusion models analyze medical images to detect anomalies, segment organs, and aid in diagnosis. For example, identifying tumors in X-rays or classifying diseases based on MRI scans.

Drug Discovery: By generating diverse molecular structures, diffusion models accelerate drug discovery. They predict molecule properties and interactions, aiding in the development of new treatments.

6. Natural language processing (NLP):

Diffusion models are emerging in Natural Language Processing (NLP) for tasks like text generation, translation, and summarization. By modeling text as data and applying noise and denoising processes, these models can generate human-like text, translate languages accurately, and condense information into concise summaries.

Conclusion

Diffusion models are designed to reverse the process of noise introduction, transforming random data into coherent structures. When applied to text-to-image generation, this capability enables the model to create highly detailed and realistic visuals based on textual descriptions. By iteratively refining the image through a noise reduction process guided by the text, diffusion models demonstrate remarkable proficiency in bridging the gap between language and visual representation. By combining the strengths of long horizon task planning for AI and generative modeling, a more intelligent and adaptable AI systems environment will be developed soon.

This core principle of noise-to-signal conversion is adaptable to various domains. In essence, diffusion models learn to reverse-engineer a process, moving from randomness to order. This versatility makes them promising candidates for addressing challenges in fields beyond image generation, such as natural language processing, where understanding and generating human-like text is a primary goal.